AMD, ARM vendors and Intel have been busy unifying CPU and GPU memory for years. It is not easy to design a model in which two (or more) processors can access the same memory without deadlocking each other.

NVIDIA just announced CUDA 6, and to my surprise it includes “Unified Memory”. Am I missing something completely, or did they just overtake their competitors, since the name implies a single memory? The answer is in their definition:

Unified Memory — Simplifies programming by enabling applications to access CPU and GPU memory without the need to manually copy data from one to the other, and makes it easier to add support for GPU acceleration in a wide range of programming languages.

The official definition is:

Unified Memory Access (UMA) is a shared memory architecture used in parallel computers. All the processors in the UMA model share the physical memory uniformly. In a UMA architecture, access time to a memory location is independent of which processor makes the request or which memory chip contains the transferred data.

See the difference?


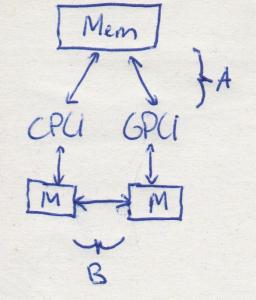

The image above explains it differently: A is how UMA is officially defined, and B is how NVIDIA has redefined it.

So NVIDIA’s Unified Memory solution was engineered by marketers, not by hardware engineers. On Twitter, I seem not to be the only one who felt the need to explain that it differs from the terminology other hardware designers have been using.

So if it is not unified memory, what is it?

It is intelligent synchronisation between CPU and GPU memory. The real question is how this new feature differs from Unified Virtual Addressing (UVA, introduced in CUDA 4).

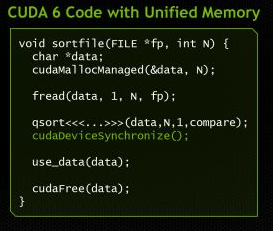

UVA defines a single address space, in which CUDA takes care of synchronisation when the addresses are not physically in the same memory. The developer has to hand ownership to either the CPU or the GPU, so CUDA knows when to sync the memories. It still needs cudaDeviceSynchronize() to trigger synchronisation (see image).
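In code, the CUDA 6 pattern from the image comes down to one allocation that both processors use, plus an explicit synchronisation point before the CPU reads the results. This is a minimal sketch, assuming a CUDA 6 or later toolkit and a GPU that supports managed memory. `cudaMallocManaged`, `cudaDeviceSynchronize` and `cudaFree` are real runtime calls; `increment` and `managed_increment_sum` are hypothetical names chosen for illustration.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical kernel: each thread adds 1 to one element.
__global__ void increment(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical helper: fills 0..n-1 on the CPU, increments on the GPU,
// then sums on the CPU. Returns -1 if any CUDA call fails.
long long managed_increment_sum(int n) {
    int* data = nullptr;
    // One allocation, one pointer, usable from both host and device code.
    if (cudaMallocManaged(reinterpret_cast<void**>(&data), n * sizeof(int)) != cudaSuccess)
        return -1;

    for (int i = 0; i < n; ++i) data[i] = i;      // CPU writes

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    increment<<<blocks, threads>>>(data, n);      // GPU uses the same pointer

    // The launch is asynchronous, so the CPU must wait for the kernel before
    // reading its results; on pre-Pascal GPUs, touching managed memory at all
    // while a kernel is running is an error.
    if (cudaGetLastError() != cudaSuccess || cudaDeviceSynchronize() != cudaSuccess) {
        cudaFree(data);
        return -1;
    }

    long long sum = 0;
    for (int i = 0; i < n; ++i) sum += data[i];   // CPU reads, no cudaMemcpy
    cudaFree(data);
    return sum;
}
```

`managed_increment_sum(1000)` should return 500500, the sum of 1..1000. There is no `cudaMemcpy` anywhere, but the `cudaDeviceSynchronize()` call is still the developer's job.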

From AnandTech, which wrote about Unified (virtual) Memory:

This in turn is intended to make CUDA programming more accessible to wider audiences that may not have been interested in doing their own memory management, or even just freeing up existing CUDA developers from having to do it in the future, speeding up code development.

So it’s there to attract new developers, and later to take care of them being bad programmers? I cannot agree, even if it makes GPU programming popular. I don’t bike on highways.

From Phoronix, which discussed the changes in NVIDIA Linux driver 331.17:

The new NVIDIA Unified Kernel Memory module is a new kernel module for a Unified Memory feature to be exposed by an upcoming release of NVIDIA’s CUDA. The new module is nvidia-uvm.ko and will allow for a unified memory space between the GPU and system RAM.

So it is UVM 2.0, but without any API changes. That’s clear, then. It simply matters a lot whether it is real or virtual, and I really don’t understand why NVIDIA chose to obfuscate the matter.
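Whether memory is physically shared or only virtually unified can be checked per device. A small sketch using `cudaGetDeviceProperties`: the `integrated` field reports a GPU that shares physical DRAM with the CPU (as on Tegra), `unifiedAddressing` reports UVA, and `managedMemory` reports support for managed allocations. The struct `MemoryCaps` and the two functions are hypothetical names.

```cuda
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical summary of the memory-related device properties.
struct MemoryCaps {
    bool ok;                  // cudaGetDeviceProperties succeeded
    bool integrated;          // GPU shares physical DRAM with the CPU
    bool unified_addressing;  // UVA: one virtual address space for host and device
    bool managed_memory;      // cudaMallocManaged is supported
};

MemoryCaps query_memory_caps(int device) {
    MemoryCaps caps = {false, false, false, false};
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) return caps;
    caps.ok = true;
    caps.integrated = prop.integrated != 0;
    caps.unified_addressing = prop.unifiedAddressing != 0;
    caps.managed_memory = prop.managedMemory != 0;
    return caps;
}

// Prints whether "unified" memory on this device is physical or only virtual.
void print_memory_caps(int device) {
    MemoryCaps c = query_memory_caps(device);
    if (!c.ok) { std::printf("device %d: query failed\n", device); return; }
    std::printf("device %d: %s memory, UVA %s, managed memory %s\n", device,
                c.integrated ? "physically shared" : "separate (discrete)",
                c.unified_addressing ? "yes" : "no",
                c.managed_memory ? "yes" : "no");
}
```

On a discrete card, `integrated` is 0 even when the other two flags are set, which is the real-versus-virtual distinction made above.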

In OpenCL this has to be done explicitly by mapping and unmapping pinned memory, but the result is very comparable to what UVM does. I do think UVM is the cleaner API.

Let me know what you think. If you have additional information, I’m happy to add this.

I think you misunderstand what CUDA does here. They did not claim to have solved the UMA challenge; rather, they offer an easier way for programmers to do their work without having to _manually_ take care of transfers to and from GPU memory.
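For comparison, this is the manual route the comment refers to: a separate device buffer and a `cudaMemcpy` in each direction. It is a minimal sketch assuming a CUDA-capable GPU and the CUDA runtime API; `increment` and `explicit_copy_increment_sum` are hypothetical names.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical kernel: each thread adds 1 to one element.
__global__ void increment(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical helper: fills 0..n-1, increments on the GPU, sums on the CPU,
// with every transfer spelled out. Returns -1 if any CUDA call fails.
long long explicit_copy_increment_sum(int n) {
    std::vector<int> host(n);
    for (int i = 0; i < n; ++i) host[i] = i;            // CPU-side copy of the data

    const size_t bytes = n * sizeof(int);
    int* dev = nullptr;                                  // separate GPU-side copy
    if (cudaMalloc(reinterpret_cast<void**>(&dev), bytes) != cudaSuccess) return -1;

    // Manual transfer: host -> device.
    cudaError_t err = cudaMemcpy(dev, host.data(), bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        const int threads = 256;
        increment<<<(n + threads - 1) / threads, threads>>>(dev, n);
        err = cudaGetLastError();
    }
    // Manual transfer: device -> host. cudaMemcpy waits for the kernel first.
    if (err == cudaSuccess)
        err = cudaMemcpy(host.data(), dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    if (err != cudaSuccess) return -1;

    long long sum = 0;
    for (int v : host) sum += v;
    return sum;
}
```

`explicit_copy_increment_sum(1000)` should return 500500. The data exists twice, in `host` and in `dev`, and keeping the two in step is the programmer's job; that bookkeeping is what the CUDA 6 feature hides.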

I’m very aware of what NVIDIA is doing: using the wrong terminology, probably on purpose. I do like their technique, but it should be called something like “intelligent synchronisation”, not “unified memory”.

I know what you’re thinking, but they did not use the term “access” precisely to avoid such misconceptions. Their solution only suggests that there is now a Unified (not Uniform) concept of memory, where coders do not need to take care of these back-and-forth memory transfers… and by the way, do you know how hard it is to coin new terms nowadays? 🙂

Sure. 🙂 I rest my case.

I’ve added some explanation of what it is (based on what I’ve discovered so far).

Oh God.

http://devblogs.nvidia.com/parallelforall/unified-memory-in-cuda-6/

See also the discussion at Reddit: http://www.reddit.com/r/gpgpu/comments/1qr4aw/how_nvidia_marketed_unified_virtual_addressing/

Ditto to what studus says. What you have under “official definition” above seems to actually be for “Uniform Memory Access”. But CUDA uses the term “Unified Memory”. These are different terms, so I don’t think it’s super misleading on nVidia’s part; I have never seen nVidia actually use the term UMA for this. Though I agree UVM would have been a better term than UM.

Also, regarding UM vs UVA:

UVA: As far as I know, this does not actually do any automatic block copies. With UVA, the same type of pointer can reference memory on CPU or any of several GPUs. But my understanding is when you, say, read CPU memory using UVA from a GPU kernel, it does not automatically copy a block of data from CPU memory to GPU global memory. It only reads the requested data from CPU memory over the PCIe bus into your GPU registers or local memory. From nVidia: “Zero-Copy [with UVA] provides some of the convenience of Unified Memory, but none of the performance, because it is always accessed with PCI-Express’s low bandwidth and high latency.”
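The zero-copy path described here can be sketched with mapped, pinned host memory: the kernel dereferences a pointer into CPU RAM and every access travels over PCIe, with no block copy into GPU memory. This sketch assumes a system where UVA is active (64-bit, Fermi or newer), so pinned host allocations are directly accessible from the device. `cudaHostAlloc`, `cudaHostAllocMapped`, `cudaHostGetDevicePointer` and `cudaFreeHost` are real runtime APIs; `increment` and `zero_copy_increment_sum` are hypothetical names.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical kernel: each thread adds 1 to one element.
__global__ void increment(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical helper: the data never leaves CPU RAM; the GPU reads and
// writes it in place over PCIe. Returns -1 if any CUDA call fails.
long long zero_copy_increment_sum(int n) {
    int* host = nullptr;
    // Pinned (page-locked) host memory, mapped into the device's address space.
    if (cudaHostAlloc(reinterpret_cast<void**>(&host), n * sizeof(int),
                      cudaHostAllocMapped) != cudaSuccess)
        return -1;
    for (int i = 0; i < n; ++i) host[i] = i;

    // Device-side alias of the same physical pages. Under UVA it equals
    // `host`; asking for it explicitly avoids relying on that.
    int* alias = nullptr;
    cudaError_t err = cudaHostGetDevicePointer(reinterpret_cast<void**>(&alias), host, 0);
    if (err == cudaSuccess) {
        const int threads = 256;
        // Every load and store in this kernel crosses the PCIe bus.
        increment<<<(n + threads - 1) / threads, threads>>>(alias, n);
        err = cudaGetLastError();
    }
    // No copy back is needed, but the CPU must still wait for the kernel.
    if (err == cudaSuccess) err = cudaDeviceSynchronize();

    long long sum = 0;
    if (err == cudaSuccess)
        for (int i = 0; i < n; ++i) sum += host[i];
    cudaFreeHost(host);
    return err == cudaSuccess ? sum : -1;
}
```

For n = 1000 the result should be 500500; what changes is where the data lives, not what is computed. Each element is touched only once here, which is the case where zero-copy hurts least; repeated accesses would each pay the PCIe latency again.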

UM: This seems to automatically copy pages of memory between CPU and GPU global memory as needed. In other words, CPU memory is paged to the GPU, and when there is a page fault, another page of CPU memory is copied into GPU memory. Compared to plain UVA, this seems a much cleaner way to exploit data locality and get high performance on data that is much bigger than GPU memory. Though, as with any tool, it carries risks.
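The page-based behaviour described here can be illustrated with a managed allocation that a kernel only touches sparsely. This is a sketch under stated assumptions: on GPUs with hardware page faulting (Pascal and newer, which arrived after CUDA 6) running on Linux, only the pages the kernel actually touches are expected to migrate, whereas on CUDA 6-era Kepler GPUs the driver migrates every managed page the CPU has touched back to the GPU at kernel launch. The code computes the same result either way and does not measure migration itself; `touch_every` and `sparse_touch_sum` are hypothetical names.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical kernel: a grid-stride loop that doubles only every
// `stride`-th element, so most of the allocation is never accessed.
__global__ void touch_every(int* data, long long n, long long stride) {
    long long first = (static_cast<long long>(blockIdx.x) * blockDim.x + threadIdx.x) * stride;
    long long step = static_cast<long long>(gridDim.x) * blockDim.x * stride;
    for (long long i = first; i < n; i += step) data[i] *= 2;
}

// Hypothetical helper: CPU initialises every element to 1, GPU doubles the
// sparse subset, CPU sums everything. Returns -1 if any CUDA call fails.
long long sparse_touch_sum(long long n, long long stride) {
    int* data = nullptr;
    if (cudaMallocManaged(reinterpret_cast<void**>(&data), n * sizeof(int)) != cudaSuccess)
        return -1;
    for (long long i = 0; i < n; ++i) data[i] = 1;       // first touch on the CPU

    // With stride 1024, touched ints are 4 KiB apart; the driver may still
    // migrate in larger chunks than that.
    touch_every<<<64, 256>>>(data, n, stride);
    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess) err = cudaDeviceSynchronize();

    long long sum = 0;
    if (err == cudaSuccess)
        for (long long i = 0; i < n; ++i) sum += data[i]; // CPU reads everything again
    cudaFree(data);
    return err == cudaSuccess ? sum : -1;
}
```

With n = 2^20 and stride = 1024, 1024 elements are doubled, so the expected sum is 1048576 + 1024 = 1049600.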

Got a reply from an Nvidia employee: it actually is supported on Tegra K1.

I disagree with your definitions, as they describe *virtual* systems (solved in software) and not real unified/shared memory systems (solved in hardware) – this is exactly what Nvidia mixes up. The two virtual methods you described are UVA + explicit copy and UVA + implicit data-copies.

Why is Nvidia mixing up virtual and real? They are getting competition from AMD’s APUs and Intel’s Ivy Bridge, two SoC architectures with both the GFLOPS and real unified memory. It is in their interest to mix things up, so that the marketing of these two competitors is weakened.

The problem for me is that Nvidia is a King of Marketing. Because I like to keep people well informed and therefore write articles like this one, I get the image of an anti-Nvidia guy. I love their hardware; I dislike their marketing.

UVM is the internal term used by NVIDIA.

I am totally confused: what are all of you trying to say?

That NVidia’s “Unified Memory” is in fact “Unified Virtual Memory”.