The research lab LaSEEB (Lisboa, Portugal) is active in the areas of Biomedical Engineering, Computational Intelligence and Evolutionary Systems. They create software using OpenCL and CUDA to speed up their research and simulations.

They were one of the first groups to try out OpenCL, even before StreamHPC existed. To simplify the research at the lab, Nuno Fachada created cf4ocl – a C Framework for OpenCL. During an e-mail correspondence with Nuno, I asked him to tell me something about how OpenCL was used within their lab. He was happy to share a short story.

We started working with OpenCL in early 2009. We were working with CUDA for a few months, but because OpenCL was to be adopted by all platforms, we switched right away. At the time we used it with NVidia GPUs and PS3’s, but had many problems because of the verbosity of its C API. There were few wrappers, and those that existed weren’t exactly stable or properly documented. Adding to this, the first OpenCL implementations had some bugs, which didn’t help. Some people in the lab gave up using OpenCL because of these initial problems.

Everyone who started early on will recognise the problems described above. Now fast-forward to today.

Nowadays it’s much easier to use, due to the stability of the implementations, and the several wrappers for different programming languages. For C however, I think cf4ocl is the most complete and well documented. The C people here in the lab are getting into OpenCL again due to it (the Python guys were already into it again due to the excellent PyOpenCL library). Nowadays we’re using OpenCL for AMD and NVidia GPUs and multicore CPUs, including some Xeons.

This is what I hear more often lately: the return of OpenCL to research labs and companies after several years of CUDA. It’s a combination of preparing for the long term, growing interest in exploring other and/or cheaper(!) accelerators than NVIDIA’s GPUs, and OpenCL being ready for prime time.

♦

Do you have your story of how OpenCL is used within your lab or company? Just contact us.

Are you interested in other wrappers for OpenCL? See this list on our knowledge base.

Altera has just released the free ebook FPGAs for dummies. One part of the book is devoted to OpenCL, so we’ll quote some extracts here from one of the chapters. The rest of the book is worth a read, so if you want to check the rest of the text, just

When copying data from global to local memory, you often see code like below (1D data):

So, for this year it will be K40. Here’s an overview:

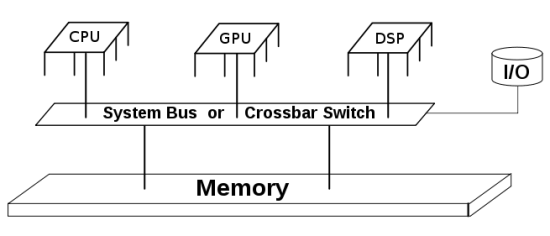

At Intel they have CPUs (Xeon, Ivy Bridge), GPUs (Iris) and accelerators (Xeon Phi). OpenCL enables each processor to be used to the fullest, and they now promote it as such. Watch the video below and see their view on why OpenCL makes a difference for Intel’s customers.

Khronos just announced three OpenCL-based releases:

On 15–17 April 2014 a three-day workshop around HPC is being organised. It is free and focuses on bringing industry and academia together.

According to the
